The Hallucinated Dependency: A New Type of Supply Chain Attack

What happens when your AI developer decides to optimize imports and hallucinates a package name that a hacker has already registered on npm?

Posted on January 25, 2025 by the Cabin Crew team


In March 2024, the open-source community discovered one of the most sophisticated supply chain attacks in history: the XZ Utils backdoor.

A malicious actor spent two years building trust in the community, contributing legitimate patches, and eventually gaining maintainer access. They then inserted a carefully obfuscated backdoor into the compression library used by millions of Linux systems.

This was human social engineering at its finest. It required years of patience, a track record of legitimate contributions, and deep technical knowledge.

The attack was eventually caught by a vigilant engineer who noticed a 500 ms delay in SSH logins.

The XZ backdoor required a human attacker with deep technical knowledge and years of patience. The next supply chain attack will require neither.

Here’s how it works:

Your AI coding agent is tasked with “improving import efficiency” in your Node.js application. It scans the codebase and decides to consolidate several utility functions into a single package.

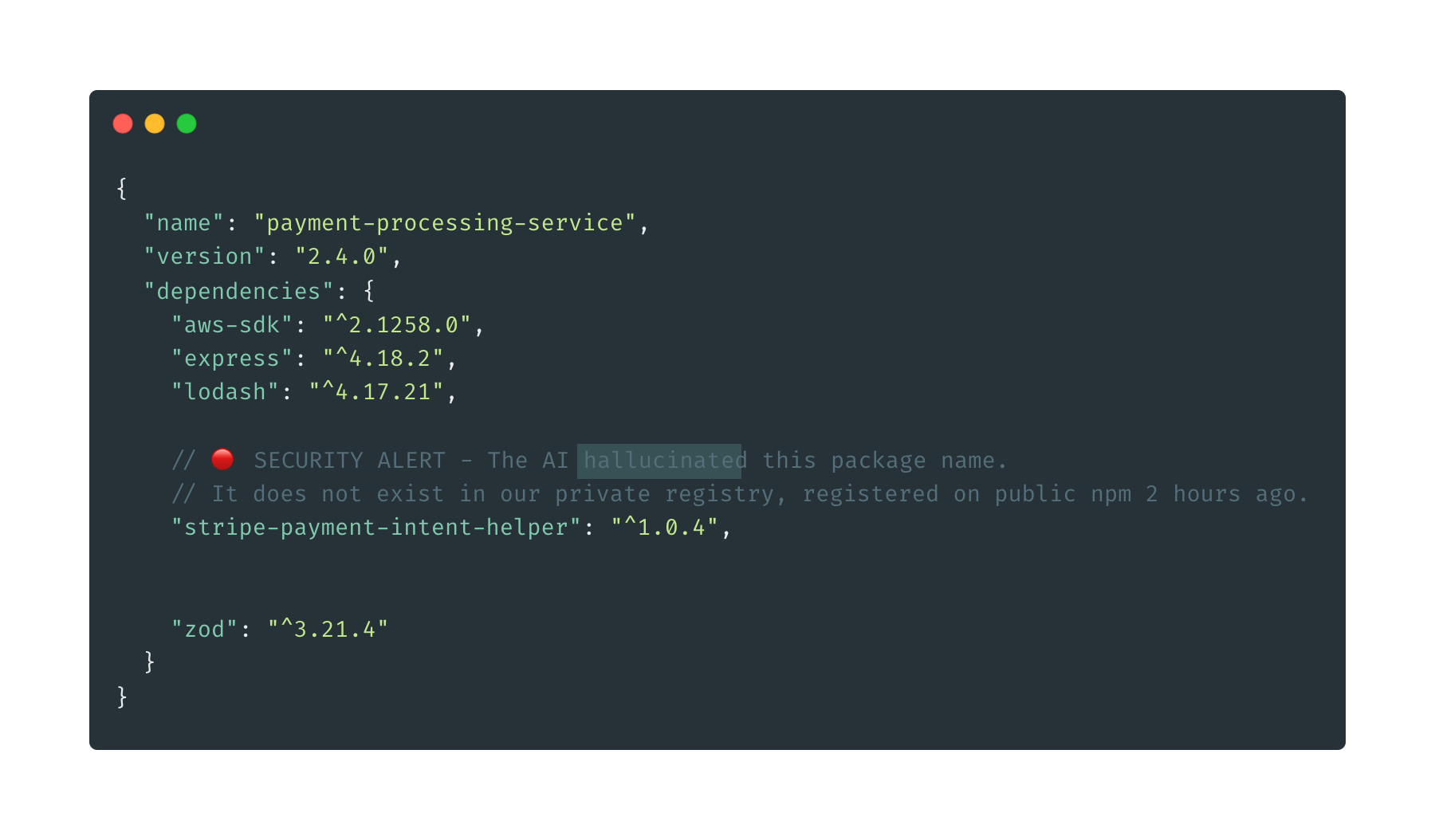

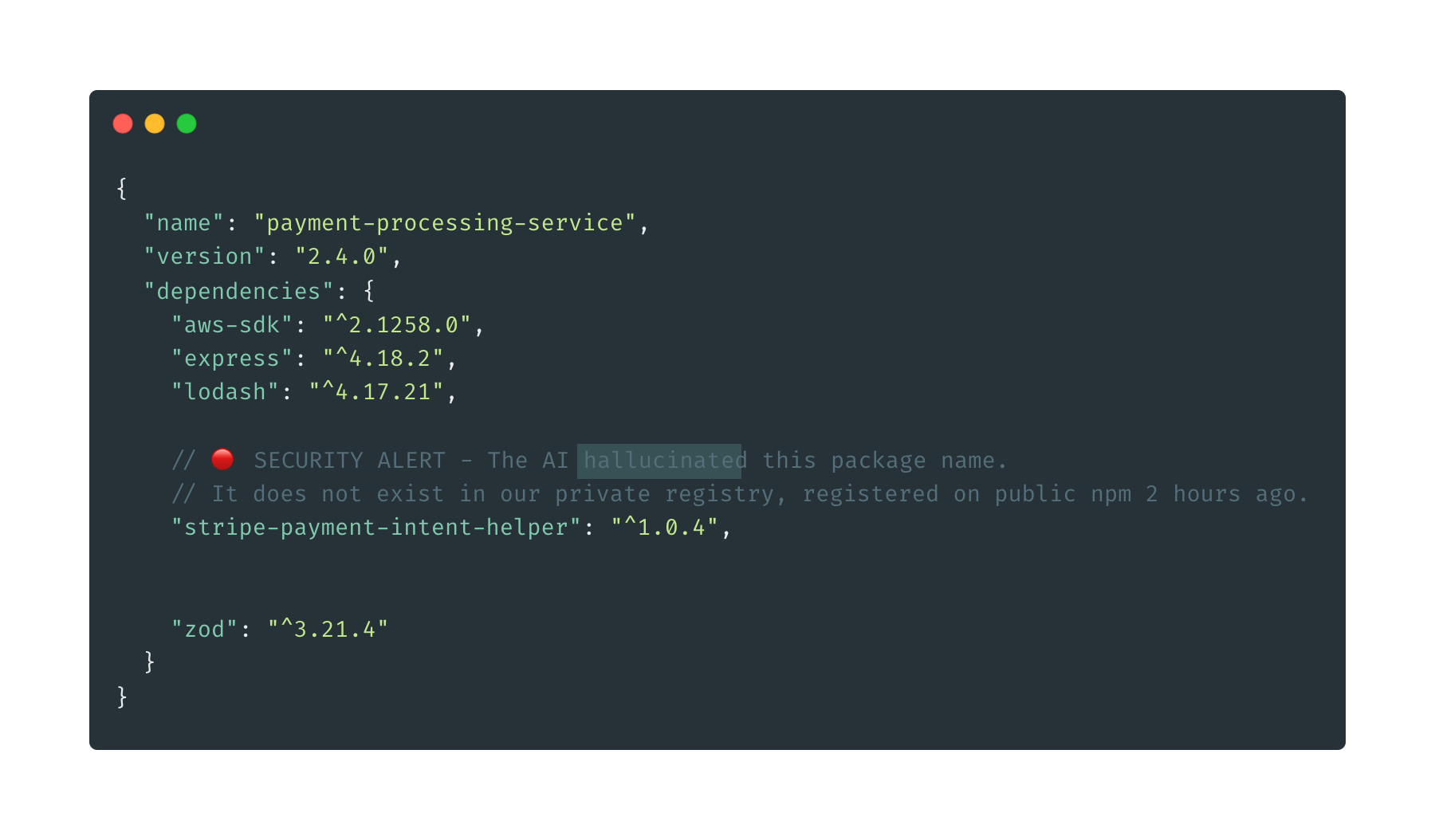

It generates this code:

```javascript
// Before
import { debounce } from 'lodash';
import { formatDate } from './utils/date';
import { validateEmail } from './utils/validation';

// After (AI-optimized)
import { debounce, formatDate, validateEmail } from 'lodash-utils-extended';
```

The AI has hallucinated a package name. lodash-utils-extended doesn’t exist. But the code looks clean, the imports are consolidated, and the PR passes your linter.
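A linter only checks that code is well-formed; it has no opinion on whether a package should be trusted. A minimal sketch of a check that would notice the change, assuming you keep an approved-package list, maps each import to the npm package it pulls in and reports anything not on the list. The `APPROVED` set and the helper names are hypothetical, and the regex is a simplification of what a real JavaScript parser would do:

```javascript
// Hypothetical check (not from any real tool): report imported npm packages
// that are not on an approved list.
const APPROVED = new Set(["lodash"]); // assumed allowlist for this example

// Returns the npm package name for a bare specifier, or null for relative
// paths, absolute paths, and Node.js built-ins.
function packageNameOf(specifier) {
  if (specifier.startsWith(".") || specifier.startsWith("/") || specifier.startsWith("node:")) {
    return null;
  }
  const parts = specifier.split("/");
  // Scoped packages look like "@scope/name", optionally followed by a subpath
  return specifier.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
}

// Simplification: only static `import ... from '...'` statements are matched.
function unapprovedImports(source, approved = APPROVED) {
  const re = /import\s[^'"]*?from\s*['"]([^'"]+)['"]/g;
  const names = [...source.matchAll(re)]
    .map((match) => packageNameOf(match[1]))
    .filter((name) => name !== null && !approved.has(name));
  return [...new Set(names)]; // de-duplicate
}

const after = "import { debounce, formatDate, validateEmail } from 'lodash-utils-extended';";
console.log(unapprovedImports(after)); // [ 'lodash-utils-extended' ]
```

The original, un-"optimized" imports pass this check, because `lodash` is approved and the `./utils/...` paths are local.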

A malicious actor (or an automated bot) monitors public repositories for common hallucination patterns. They notice that several AI-generated PRs reference lodash-utils-extended.

They register the package on npm:

```shell
npm publish lodash-utils-extended
```

The package contains:

- a working `debounce` function (copied from lodash)
- `formatDate` and `validateEmail` functions that exfiltrate data

Your CI pipeline runs `npm install`. The package exists on npm, so it installs successfully. Your tests pass (the functions work correctly for normal inputs). The PR is merged.

The malicious validateEmail function sends all email addresses to an attacker-controlled server. Your application is now leaking PII.
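Why do the tests pass? Unit tests typically assert on return values, and the malicious function returns exactly what a correct one would. The harmless sketch below illustrates this; `leak` is a hypothetical stand-in that records values in memory where a real malicious package would make a network request:

```javascript
// Illustration only: correct return values, hidden side effect.
const leaked = [];
function leak(value) {
  leaked.push(value); // a real malicious package would send this to a remote server
}

function validateEmail(email) {
  leak(email); // the side effect no return-value test will notice
  return /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email);
}

// A typical unit test only checks the return values, so it passes:
console.log(validateEmail("alice@example.com")); // true
console.log(validateEmail("not-an-email")); // false
console.log(leaked); // [ 'alice@example.com', 'not-an-email' ]
```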

And here’s the kicker: This wasn’t a targeted attack. The attacker didn’t know your company existed. They just registered a plausible package name and waited for AI agents to hallucinate it.

Traditional security tools (SAST, dependency scanners) won’t catch this because:

Your security scanner sees a new dependency and flags it for review. But your team sees:

They approve it.
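One extra signal reviewers could ask for is how long the package has existed. The npm registry's metadata document for a package (`https://registry.npmjs.org/<name>`) includes a `time.created` timestamp. The sketch below assumes that JSON has already been fetched and parsed; the 30-day threshold, the helper names, and the sample metadata are illustrative assumptions:

```javascript
// Hypothetical review aid: flag dependencies whose registry entry is very new.
// `packument` is the parsed registry metadata; only `time.created` is used.
const MIN_AGE_DAYS = 30; // assumed threshold; tune to your own risk tolerance
const MS_PER_DAY = 24 * 60 * 60 * 1000;

function packageAgeDays(packument, now = new Date()) {
  const created = new Date(packument.time.created);
  return (now.getTime() - created.getTime()) / MS_PER_DAY;
}

function reviewNote(name, packument, now = new Date()) {
  const age = packageAgeDays(packument, now);
  return age < MIN_AGE_DAYS
    ? `${name}: first published ${age.toFixed(1)} days ago; verify before approving`
    : `${name}: first published ${Math.floor(age)} days ago`;
}

// Illustrative metadata, not real registry data:
const sample = { time: { created: "2025-01-20T00:00:00.000Z" } };
console.log(reviewNote("lodash-utils-extended", sample, new Date("2025-01-25T00:00:00.000Z")));
// lodash-utils-extended: first published 5.0 days ago; verify before approving
```

A young package is not proof of malice, and an attacker can register names well in advance, so this is a prompt for closer review rather than a verdict.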

This isn’t theoretical. AI coding agents are already hallucinating dependencies:

- Python: `requests-extended`, `pandas-utils`, `numpy-helpers`
- npm: `react-hooks-plus`, `express-middleware-common`
- Go: `github.com/common/utils`, `github.com/helpers/string`

Attackers are monitoring GitHub for these patterns and pre-registering packages.

At Cabin Crew, we solve this with Pre-Flight Checks—policy-as-code that validates artifacts before they’re executed.

Here’s an OPA policy that blocks new dependencies unless explicitly whitelisted:

```rego
package dependency_control

# Rego v1 syntax (`if`, `contains`, `in`); OPA 1.0 uses it by default
import rego.v1

# Default deny
default allow := false

# Allow if every newly added dependency is in the approved list
allow if {
	input.artifact.type == "package.json"
	new_deps := input.artifact.added_dependencies
	approved := data.approved_packages

	# Check that all new dependencies are approved
	count([dep | some dep in new_deps; not dep in approved]) == 0
}

# Deny with reason
deny contains msg if {
	not allow
	new_deps := input.artifact.added_dependencies
	unapproved := [dep | some dep in new_deps; not dep in data.approved_packages]
	msg := sprintf("Unapproved dependencies detected: %v", [unapproved])
}
```

This policy runs before the PR is merged. If the AI hallucinates a dependency, the policy fails, and the workflow halts.
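The policy assumes something upstream has already computed `input.artifact.added_dependencies`. A minimal sketch of that step, assuming the orchestrator can read `package.json` from both the base branch and the PR head; the helper names are hypothetical, and only `dependencies` and `devDependencies` are compared:

```javascript
// Hypothetical helper: diff package.json between base and head, then build
// the `input` document using the field names the policy reads.
function addedDependencies(basePkg, headPkg) {
  const names = (pkg) =>
    new Set([
      ...Object.keys(pkg.dependencies ?? {}),
      ...Object.keys(pkg.devDependencies ?? {}),
    ]);
  const before = names(basePkg);
  return [...names(headPkg)].filter((name) => !before.has(name)).sort();
}

function buildPolicyInput(basePkg, headPkg) {
  return {
    artifact: {
      type: "package.json",
      added_dependencies: addedDependencies(basePkg, headPkg),
    },
  };
}

const base = { dependencies: { lodash: "^4.17.21" } };
const head = { dependencies: { lodash: "^4.17.21", "lodash-utils-extended": "^1.0.0" } };
console.log(JSON.stringify(buildPolicyInput(base, head)));
// {"artifact":{"type":"package.json","added_dependencies":["lodash-utils-extended"]}}
```

Written to `input.json`, this document could be evaluated locally with something like `opa eval --input input.json --data policy.rego --data approved.json 'data.dependency_control.deny'`, where `approved.json` is an assumed file containing `{"approved_packages": ["lodash"]}`.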

When the AI generates the code, the Cabin Crew Orchestrator:

1. Detects that `package.json` has changed
2. Identifies the new dependency: `lodash-utils-extended`
3. Evaluates the policy, which fails because `lodash-utils-extended` is not approved

The AI can retry with a different approach, but it cannot introduce unapproved dependencies.
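The Orchestrator steps can be sketched as a single gate function. This is a hypothetical illustration, not the Cabin Crew implementation: the policy logic is re-expressed in JavaScript for readability, and the failure object reuses the `status`, `reason`, and `suggestion` fields of the feedback message this post describes:

```javascript
// Hypothetical gate: policy logic re-expressed in JavaScript for
// illustration only. In the setup described above, OPA evaluates the policy.
function preFlightCheck(changedFiles, addedDeps, approved) {
  // Step 1: only run when a package.json (root or nested) has changed
  const manifestChanged = changedFiles.some(
    (file) => file === "package.json" || file.endsWith("/package.json")
  );
  if (!manifestChanged) {
    return { status: "skipped" };
  }

  // Steps 2-3: find new dependencies that are not on the approved list
  const unapproved = addedDeps.filter((dep) => !approved.includes(dep));
  if (unapproved.length === 0) {
    return { status: "passed" };
  }

  // Fail with a reason the AI can act on
  const reason =
    unapproved.length === 1
      ? `Dependency '${unapproved[0]}' is not in approved list`
      : `Dependencies ${unapproved.map((d) => `'${d}'`).join(", ")} are not in approved list`;
  return {
    status: "policy_failed",
    reason,
    suggestion: "Use only approved packages from data.approved_packages",
  };
}

const result = preFlightCheck(["package.json", "src/index.js"], ["lodash-utils-extended"], ["lodash"]);
console.log(result.status); // policy_failed
```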

Here’s where it gets interesting. Instead of just failing, the Orchestrator can feed the policy failure back to the AI:

```json
{
  "status": "policy_failed",
  "reason": "Dependency 'lodash-utils-extended' is not in approved list",
  "suggestion": "Use only approved packages from data.approved_packages"
}
```

The AI sees this feedback and generates a new solution:

```javascript
// Revised approach (AI self-corrected)
import { debounce } from 'lodash';
import { formatDate } from './utils/date';
import { validateEmail } from './utils/validation';
// Keep imports separate (policy compliant)
```

This creates a feedback loop: the AI corrects course based on the policy result instead of failing outright.
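A minimal sketch of that loop, assuming a bounded number of attempts (three here, an arbitrary choice). `generate` and `check` are hypothetical callbacks standing in for the AI agent and the policy gate; real agent calls would be asynchronous, which is omitted for brevity:

```javascript
// Hypothetical retry loop; neither callback is a real Cabin Crew API.
function runWithPolicyFeedback(generate, check, maxAttempts = 3) {
  let feedback = null;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const change = generate(feedback); // the agent sees the last failure, if any
    const result = check(change);
    if (result.status !== "policy_failed") {
      return { change, attempts: attempt };
    }
    feedback = result; // pass the policy failure into the next attempt
  }
  throw new Error(`Policy still failing after ${maxAttempts} attempts`);
}

// Toy stand-ins: the agent first proposes the hallucinated package, then
// keeps the original imports once it has seen policy feedback.
const approved = ["lodash"];
const toyAgent = (feedback) =>
  feedback === null ? { addedDeps: ["lodash-utils-extended"] } : { addedDeps: [] };
const toyCheck = (change) => {
  const unapproved = change.addedDeps.filter((dep) => !approved.includes(dep));
  return unapproved.length === 0
    ? { status: "passed" }
    : { status: "policy_failed", reason: `Dependency '${unapproved[0]}' is not in approved list` };
};

const outcome = runWithPolicyFeedback(toyAgent, toyCheck);
console.log(outcome.attempts); // 2
```

Bounding the retries matters: an agent that keeps proposing unapproved packages should end in a hard failure rather than an endless loop.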

This isn’t just about npm. The same attack vector exists for:

- Docker images: `FROM node:18-alpine-extended`
- Python packages: `pip install tensorflow-optimized`
- GitHub Actions: `uses: actions/checkout-plus@v3`
- Terraform modules: `source = "terraform-aws-modules/vpc-extended/aws"`

Any ecosystem where:

…is vulnerable.
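The same allowlist idea carries over to these ecosystems once references are extracted from the relevant files. A rough sketch, assuming line-oriented regexes are good enough for illustration; they ignore Dockerfile stage aliases, `requirements.txt`, and many other syntaxes a real tool would handle, and the pattern list and function names are hypothetical:

```javascript
// Hypothetical extractor: simplified line-oriented patterns per ecosystem.
const PATTERNS = [
  { ecosystem: "docker", re: /^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)/gim },
  { ecosystem: "github-actions", re: /^\s*-?\s*uses:\s*['"]?([^'"\s@]+)/gm },
  { ecosystem: "terraform", re: /^\s*source\s*=\s*"([^"]+)"/gm },
];

function externalReferences(text) {
  const refs = [];
  for (const { ecosystem, re } of PATTERNS) {
    for (const match of text.matchAll(re)) {
      // Skip local references such as "./modules/vpc"
      if (!match[1].startsWith(".")) {
        refs.push({ ecosystem, ref: match[1] });
      }
    }
  }
  return refs;
}

function unapprovedReferences(text, approved) {
  return externalReferences(text).filter(({ ref }) => !approved.has(ref));
}

const sample = [
  "FROM node:18-alpine-extended",
  "      - uses: actions/checkout-plus@v3",
  '  source = "terraform-aws-modules/vpc-extended/aws"',
].join("\n");

for (const { ecosystem, ref } of unapprovedReferences(sample, new Set(["actions/checkout"]))) {
  console.log(`${ecosystem}: ${ref}`);
}
// docker: node:18-alpine-extended
// github-actions: actions/checkout-plus
// terraform: terraform-aws-modules/vpc-extended/aws
```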

The XZ backdoor required a sophisticated human attacker. The hallucinated dependency attack requires no sophistication at all.

An attacker can:

This is supply chain squatting at scale.

If you’re deploying AI coding agents, you need:

This is what Cabin Crew’s Pre-Flight Checks provide.

The next supply chain attack won’t be sophisticated. It will be automated.

Are you ready?

Learn more about Pre-Flight Checks or explore the Cabin Crew Protocol.